Marketing Services Agencies: Compare Offers Quickly

01/20/2026

Comparing proposals from marketing services agencies can feel impossible because each offer is written in a different “language.” One focuses on channels, another on deliverables, another on outcomes, and almost all of them gloss over the operational details that decide whether you actually launch on time.

The fastest way to compare offers is to normalize every proposal into the same format, then score only what predicts results: fit, measurement, operating rigor, and risk.

Start with a one-paragraph “win definition” (so offers become comparable)

Before you look at pricing, write a short win definition you can copy into an email to every agency. This is the reference point that prevents you from choosing the best writer instead of the best operator.

Your win definition should clarify:

- Goal (what business outcome changes): pipeline, revenue, qualified leads, customer acquisition cost (CAC), retention, bookings.

- Scope (what’s included): paid media, SEO, lifecycle/email, creative, landing pages, analytics.

- Constraints (what’s true in the real world): budget range, internal approvals, compliance, current tech stack.

- Timeline (when you need the first signal and the first meaningful result): for example, 2 weeks, 30 days, or a quarter.

- Definition of done for the first 30 days: tracking verified, campaigns live, reporting cadence established.

If you already have 3 to 6 proposals, you can still do this. Your win definition becomes the filter you use to remove “nice to have” items that inflate scope.

Convert each proposal into the same “Offer Card” (10 minutes per agency)

Most proposals are hard to compare because they blend strategy, fluff, and pricing. Fix that by rewriting each offer into a single page with the same sections.

Use the table below as your normalization template.

| Offer Card section | What to extract from their proposal | What “good” looks like |
|---|---|---|
| Target customer and positioning | ICP, segments, offer angle, key messages | Specific, testable, aligned with your sales motion |
| Channels and why | Paid search, paid social, SEO, email, content, partnerships | Clear rationale tied to funnel and budget realities |
| Deliverables | Ads, landing pages, creative, content, reporting, experiments | Countable outputs and what is explicitly not included |
| Measurement plan | KPIs, attribution approach, tracking stack, QA process | A verification sprint and clarity on what gets instrumented |
| Operating cadence | Meetings, response times, approvals, reporting | A cadence that matches your decision speed |
| Team and ownership | Who does strategy, execution, creative, analytics | Named roles, clear accountability, no “everyone does everything” |
| Onboarding requirements | Access needed, asset IDs, timeline to launch | A documented onboarding process with an SLA |
| Commercial terms | Retainer, setup fees, minimum term, add-ons | Transparent, with assumptions and change control |
| Risks and dependencies | What they need from you, what can block results | Honest risk list and mitigation plan |

Once every agency is reduced to the same Offer Card, gaps become obvious. If one proposal cannot be translated into this format, that is a signal.
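If you keep Offer Cards in a script rather than a document, the gap check can be automated. The sketch below is one possible way to do it, not a prescribed tool: the `OfferCard` class, the `missing_sections` helper, and the sample agency text are all hypothetical names and values made up for illustration. The field names simply mirror the nine sections of the template above.

```python
from dataclasses import dataclass, fields


@dataclass
class OfferCard:
    """One normalized proposal. Field names mirror the template sections."""
    agency: str
    target_customer_and_positioning: str = ""
    channels_and_why: str = ""
    deliverables: str = ""
    measurement_plan: str = ""
    operating_cadence: str = ""
    team_and_ownership: str = ""
    onboarding_requirements: str = ""
    commercial_terms: str = ""
    risks_and_dependencies: str = ""


def missing_sections(card: OfferCard) -> list[str]:
    """Return the template sections the proposal left empty."""
    return [
        f.name
        for f in fields(card)
        if f.name != "agency" and not getattr(card, f.name).strip()
    ]


# Hypothetical example: a proposal that only covers three sections.
example = OfferCard(
    agency="Agency A (illustrative)",
    channels_and_why="Paid search first, because demand already exists",
    deliverables="12 ads and 2 landing pages per month",
    commercial_terms="Monthly retainer, 3-month minimum term",
)

for section in missing_sections(example):
    print(f"{example.agency}: nothing stated for {section}")
```

A long list of missing sections is the "cannot be translated into this format" signal in concrete form, and it doubles as the list of follow-up questions to send back to the agency.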

Score agencies on what actually predicts execution (not pitch quality)

Now that offers are normalized, use a simple scoring model. Keep it lightweight so you can finish the comparison quickly, but structured enough to defend the decision.

Here is a practical scorecard you can use in a spreadsheet.

| Category | What to look for | Typical questions to ask | Score (1 to 5) |
|---|---|---|---|
| Strategy fit | They understand your market and constraints | “What would you not do in the first 30 days, and why?” | |
| Plan quality | Specific actions, sequencing, and decision points | “What happens in week 1, week 2, week 3?” | |
| Measurement rigor | Clear tracking ownership and QA | “How do you verify conversion tracking before spend scales?” | |
| Operating model | Cadence, responsiveness, approvals, handoffs | “Who owns what, and how do you prevent work from stalling?” | |
| Governance and security | Least-privilege access, auditability, offboarding | “How do you request access without password sharing?” | |
| Economics and clarity | Transparent assumptions and change control | “What is included, what is billable, and what triggers a change order?” | |

A note on governance: if an agency hand-waves access with “just add us as admin everywhere,” treat that as a serious red flag. The principle of least privilege is a standard security control (see NIST’s discussion of least privilege in SP 800-53, AC-6).

Compare pricing without getting fooled by “cheap retainers”

Two offers with the same monthly retainer can have completely different total costs once you include creative, tooling, landing pages, tracking work, and meeting load. The fastest way to compare pricing is to estimate a simple total monthly cost and list assumptions.

| Cost component | What to capture | Why it matters |
|---|---|---|
| Monthly retainer | Fixed monthly fee | Baseline cost, but rarely the full cost |
| One-time setup | Onboarding, tracking, initial creative build | Some agencies front-load work, others spread it |
| Creative and production | Design, video, copy, UGC, landing pages | Often the real driver of performance and cost |
| Media management | Included or percentage of ad spend | Can become expensive as spend scales |
| Tools and data | Reporting, attribution, call tracking, enrichment | Hidden line items can add up quickly |
| “Reasonable requests” | Any vague bucket | This is where scope creep hides |

When you see a very low retainer, ask what gets deprioritized: creative volume, experimentation cadence, senior oversight, or measurement QA.
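The total monthly cost estimate can be written out as simple arithmetic. The sketch below is one way to do it under stated assumptions: the one-time setup fee is spread evenly over the minimum term, media management is billed as a percentage of ad spend, and every figure is a made-up example rather than a market benchmark. The function name and parameters are hypothetical.

```python
def estimated_monthly_cost(
    retainer: float,
    setup_fee: float,
    term_months: int,
    creative_per_month: float,
    media_fee_percent: float,
    monthly_ad_spend: float,
    tools_per_month: float,
) -> float:
    """Estimate the all-in monthly cost of one offer.

    Assumption: the setup fee is amortized evenly over the minimum term.
    """
    amortized_setup = setup_fee / term_months
    media_management = monthly_ad_spend * media_fee_percent / 100
    return (
        retainer
        + amortized_setup
        + creative_per_month
        + media_management
        + tools_per_month
    )


# Hypothetical Offer A: low retainer, but most work is billed separately.
offer_a = estimated_monthly_cost(
    retainer=4000, setup_fee=3000, term_months=6,
    creative_per_month=2500, media_fee_percent=10,
    monthly_ad_spend=20000, tools_per_month=300,
)

# Hypothetical Offer B: higher retainer with everything included.
offer_b = estimated_monthly_cost(
    retainer=6000, setup_fee=0, term_months=6,
    creative_per_month=0, media_fee_percent=0,
    monthly_ad_spend=20000, tools_per_month=0,
)

print(f"Offer A: {offer_a:,.0f} per month")
print(f"Offer B: {offer_b:,.0f} per month")
```

With these illustrative numbers, the offer with the 4,000 retainer comes to 9,300 per month all-in, while the 6,000 retainer stays at 6,000. Record the assumptions next to each estimate, since the ad spend and creative volume you plug in drive most of the difference.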

Use onboarding speed as a tie-breaker (it predicts time-to-value)

If two agencies look comparable on strategy and price, onboarding is usually the deciding factor because it affects how quickly you get signal.

Ask each agency to commit to an onboarding SLA in plain English.

| Onboarding checkpoint | What you want to hear | What to avoid |
|---|---|---|
| Access request method | Partner access, role-based permissions, documented steps | Password sharing, “make us admin everywhere” |
| Time to verified access | A specific SLA and a verification checklist | “It depends,” with no process |
| Tracking verification | QA steps before launch | Launching first, troubleshooting later |
| Asset organization | Where IDs, pixels, domains, catalogs live | Important details scattered across email threads |
| Offboarding | A defined process to remove access cleanly | No offboarding plan |

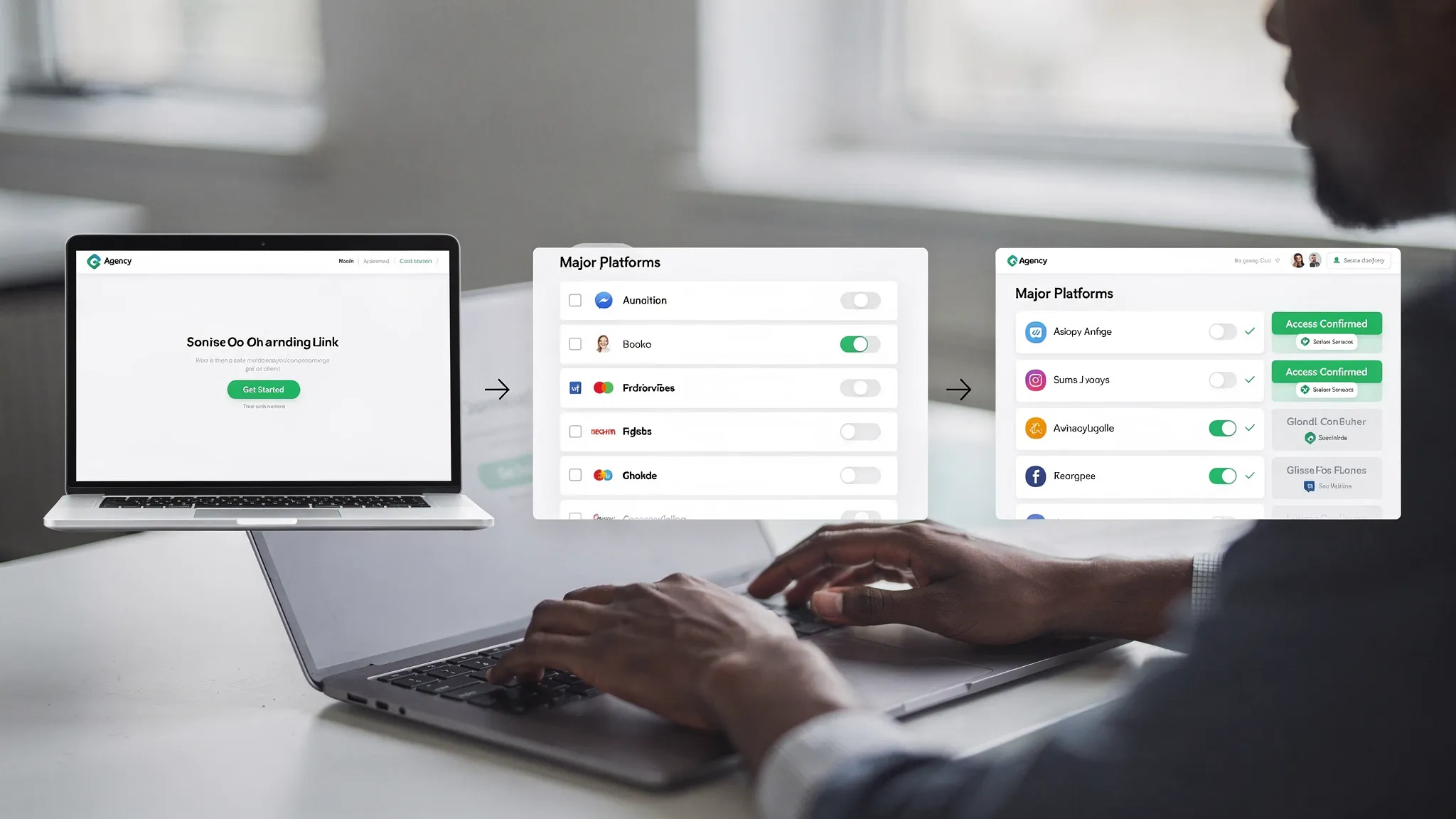

In practice, onboarding breaks when access requests are manual, spread across multiple platforms, and require back-and-forth with several client stakeholders.

If your team wants to reduce that friction, you can centralize client access setup through a single onboarding link. Connexify is built for this specific problem: it provides one-link client onboarding with a branded experience, supports multiple platforms, allows customizable permissions, and offers white-label options plus API and webhook integrations. You can learn how it works on the Connexify homepage or evaluate the broader process in this guide on how digital marketing agencies streamline client onboarding.

Run two “stress tests” to expose weak offers quickly

Most proposal comparisons fail because they evaluate the happy path only. Use two quick stress tests that reveal operational maturity.

Stress test 1: The “access delay” scenario

Ask: “If access is delayed by 7 days because the client admin is unavailable, what do you do in parallel to keep momentum?”

Strong agencies will describe parallel work (audits, creative prep, landing page drafts, tracking plan, measurement specs) and a clear escalation path.

Stress test 2: The “prove it in 30 days” plan

Ask: “What can you realistically prove in 30 days, and what can you not prove yet?”

A credible answer separates leading indicators (tracking health, creative testing velocity, click-through rate (CTR) and conversion rate (CVR) trends, sales feedback loops) from lagging outcomes (pipeline and revenue), and it does not guarantee results the agency cannot control.

Make the final decision with a one-page decision brief

To compare marketing services agencies quickly and fairly, summarize the decision in a single page. This reduces second-guessing and aligns stakeholders.

Include:

- Your win definition

- The top 2 options and why they win

- Key assumptions (budget, internal resources, decision speed)

- The onboarding SLA you expect

- The first 30-day deliverables and check-ins

- The risks you accept and how you will monitor them

If you need language for scopes and SLAs, it can help to reference a structured framework like the one in Online Marketing Service: Scope, Pricing, and SLAs, then adapt it to your situation.

A faster, lower-risk option: propose a paid pilot with exit criteria

When offers are close, a short paid pilot is often the quickest path to clarity. The key is to define exit criteria so the pilot is not just “a smaller retainer.”

A good pilot focuses on:

- Measurement verification and reporting baseline

- A small number of channel tests tied to your win definition

- A clear operating cadence and decision rhythm

- A go or no-go decision at the end based on evidence

This approach is especially effective when multiple stakeholders need to buy in, because you are comparing execution, not decks.

Where Connexify fits if onboarding is your bottleneck

If your comparisons keep coming down to “who can launch fastest without messy access sharing,” consider adding a dedicated onboarding layer.

Connexify is designed to streamline client onboarding for agencies and service providers by enabling fast, secure account access setup through a single, branded link, cutting manual steps and reducing onboarding time from days to seconds. If you want to see whether it fits your workflow, you can book a demo or start with the 14-day free trial (available on the site).